The Many Ways Planned Obsolescence Is Sabotaging How We Preserve Internet History

Credit to Author: Ernie Smith | Date: Fri, 06 Sep 2019 12:54:18 +0000

A version of this post originally appeared on Tedium, a twice-weekly newsletter that hunts for the end of the long tail.

The world of technology has a problem, and it’s not something that we’re talking about nearly enough. That problem? We keep making old stuff significantly less useful in the modern day, sometimes by force.

We cite problems such as security, maintenance, and a devotion to constant evolution as reasons for allowing this to happen.

But the net effect is that we are making it impossible to continue using otherwise useful things after even a medium amount of time. I’m not even exclusively talking about things that are decades old. Sometimes, just a few years does the trick.

A quick case in point: Google has a set date for every type of Chromebook architecture to fall into an “end of life” status, where it will no longer be developed or updated, despite the fact that it’s effectively the modern version of a dumb terminal.

And the timeframe is surprisingly short: just 6.5 years after the first use of the architecture, the machine will stop auto-updating, even though an equivalent Windows machine will still be usable for years after that point.
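To make that arithmetic concrete, here is a minimal sketch in Python. It assumes only the 6.5-year window described above; the function names and the example dates are hypothetical and not taken from any Google policy document.

```python
from datetime import date

# 6.5 years expressed in months, the window described in the article.
AUE_MONTHS = 78


def add_months(start: date, months: int) -> date:
    """Return the first day of the month that falls `months` after `start`."""
    total = start.year * 12 + (start.month - 1) + months
    return date(total // 12, total % 12 + 1, 1)


def end_of_updates(platform_launch: date) -> date:
    """Month in which auto-updates stop for a given platform launch date."""
    return add_months(platform_launch, AUE_MONTHS)


def months_of_updates_left(platform_launch: date, purchase: date) -> int:
    """Whole months of updates a buyer gets, counted from the purchase month."""
    end = end_of_updates(platform_launch)
    remaining = (end.year - purchase.year) * 12 + (end.month - purchase.month)
    return max(remaining, 0)
```

The point the sketch makes is that the clock starts with the architecture, not the purchase. Under these assumptions, someone who buys a machine three years into the platform's life gets 42 months of updates rather than 78.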

There are some workarounds, including Linux distributions made specifically for Chromebooks, but the result is that computers that would still be completely usable with other operating systems are going to be pushed off the internet onramp in a few years’ time.

And that’s but one example. I have many more to share, and together, they paint a pretty dark picture for preservationists looking to capture a snapshot of time.


Josh Malone, a vintage computer enthusiast, argues that old computers must have their batteries removed, in part because of how easily the batteries corrode and damage the components inside the machine. Even with changes in technology, with batteries more deeply embedded in the phones and laptops we buy, it’s inevitable that this problem will only worsen over time.

We create devices that have batteries built to die

I have a lot of old gadgets floating around my house these days, partly out of personal interest in testing things out, in hope of writing stories about the things that I find. Some of this stuff is on loan and requires extra research that simply takes time due to the huge amount of complexity involved. Other times, it’s just an artifact that I think allows for telling an interesting story. I make weekly trips to Goodwill hoping to find the next interesting story in a random piece of what some might call junk.

But one issue keeps cropping up that I think is going to become even more prevalent in the years to come: Non-functional batteries.

Battery technology and the circuitry that connects to it vary wildly, and that creates issues that prevent gadgets from living their best lives, in large part due to the slow decay of lithium-ion batteries.

A prominent example of this, of course, is AirPods, highly attractive and functional tools that will slowly become less useful over time as their batteries go through hundreds of cycles and start to lose steam. But at the same time, AirPods are just an example of what is destined to happen to basically every set of Bluetooth headphones over time: The lithium-ion batteries driving them will slowly decay and turn a once-useful product into an object that must be continually replaced because a single part, the battery, cannot be replaced.

Older devices I’ve been testing out have batteries so old that I cannot find replacements for them. (You’ve not lived until you’ve typed a serial code for an old battery into Google and found out that the only search result for that serial code goes to a museum’s website.) But newer batteries, despite being reliant on basically the same technology year after year, can’t be easily replaced, leaving these old batteries to decay with little room for user recourse.

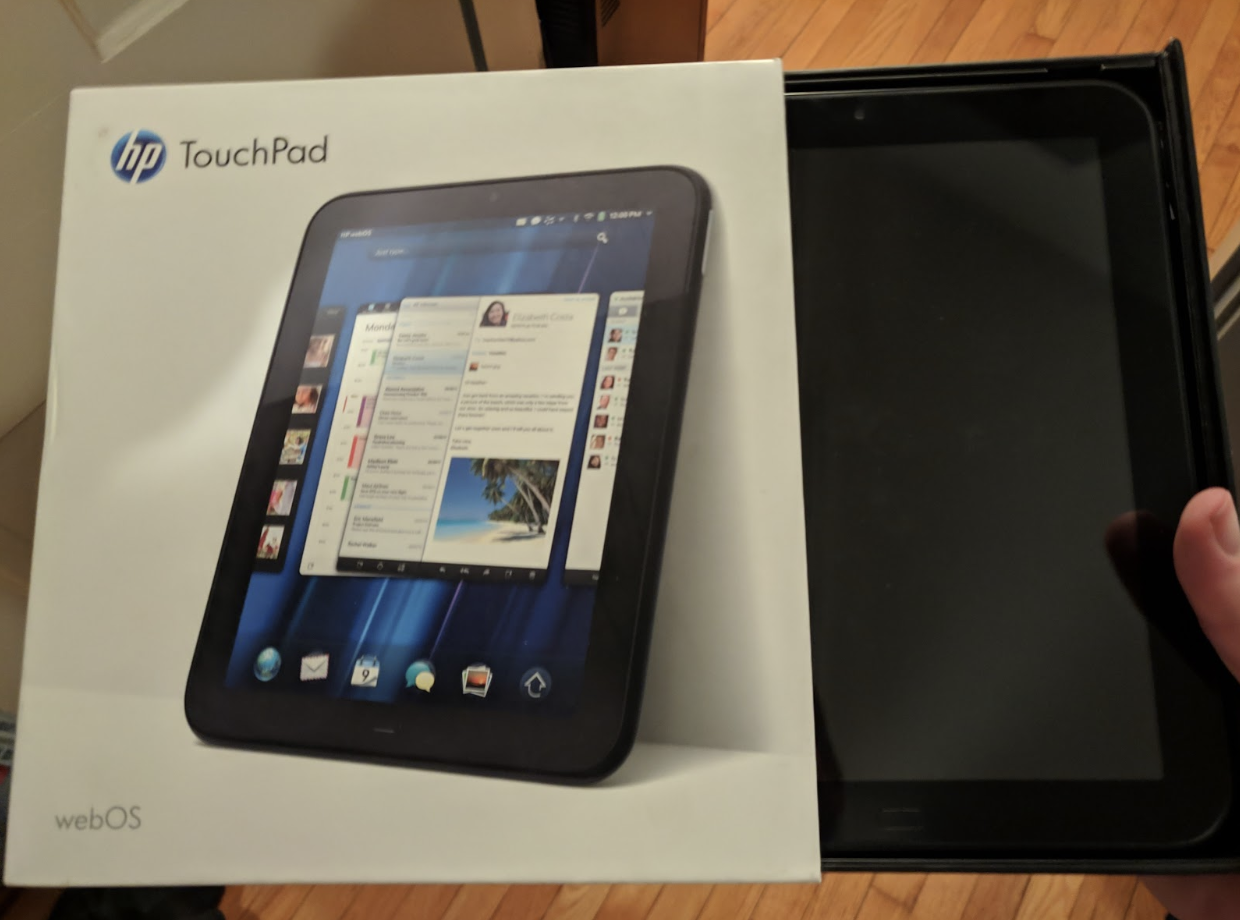

One example I’d like to bring to light in this context is the HP TouchPad, the WebOS-based tablet the company briefly sold during the period when it still cared about the fate of WebOS. It’s an interesting story, full of intrigue and even present-day Android updates.

Simply put: Many of them will not charge up after being set aside for a while. Including mine. I knew this going in, but I thought it would be fun to see if I could pull off a desperate challenge.

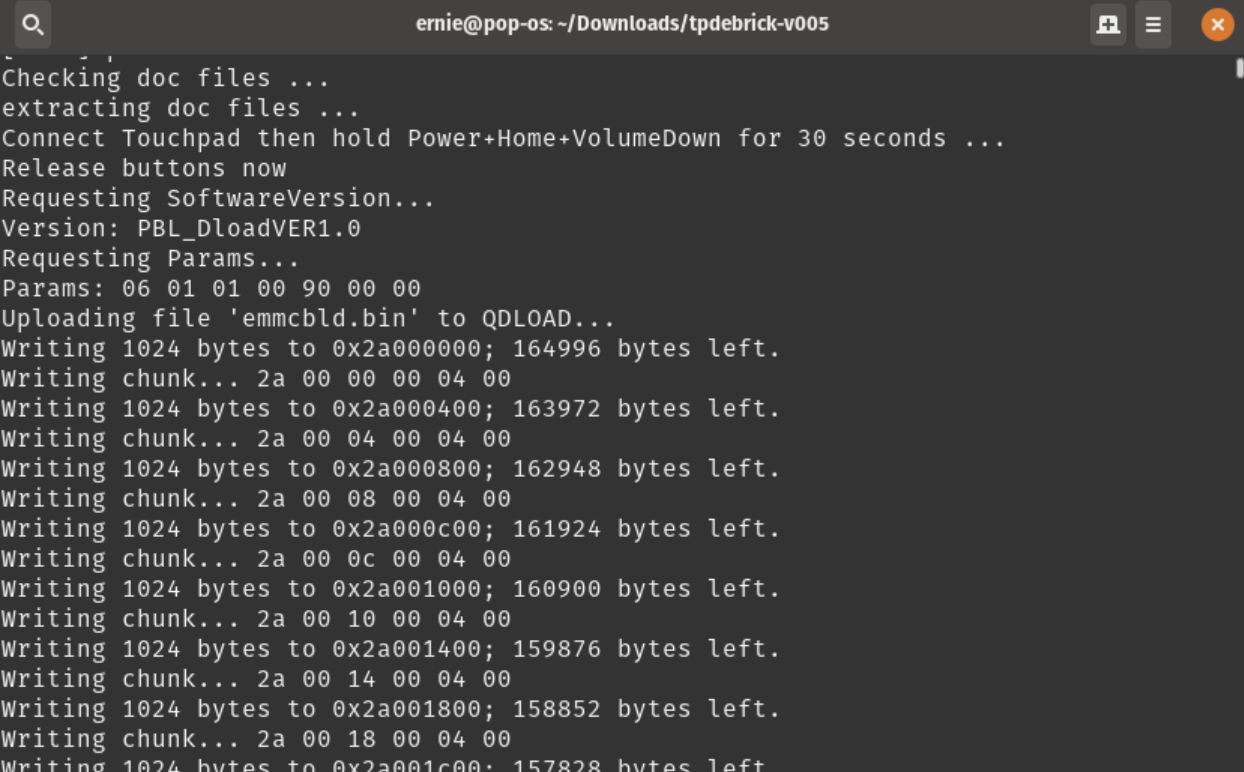

The device can still be detected by another computer (plugging it into a Linux machine and running a few Terminal commands makes it clear that the device is not only detected, but capable of being reimaged). But HP made some mistakes with its design of the battery on the TouchPad, and now, if a device has been left uncharged for even a modestly extended period, the odds are good that it is dead. Even when the device was relatively new, charging issues were common.

The TouchPad is only eight years old, a historic novelty due to only being sold for a total of 49 days and with an OS that had a cult following. But design flaws mean that it’s somewhat rare to find a working device in the wild, and revival is often a confusing mess of old pages in the Internet Archive and obscure Linux scripts that, in the right context, might just work.

I haven’t given up on mine, but I’ve read forum posts where some users have taken to putting their old TouchPads inside of an electric blanket, all for the cause of “heating up” the battery for just long enough that they can run the Linux reimaging script and get the machine to boot and charge.

Putting a heating pad around a dead HP TouchPad reeks of desperation, but considering it’s a nice if unloved tablet, desperate times call for weird tactics.

But it’s not the only somewhat recent device I’ve tried that seems to have odd battery-charging habits. I recently revived an old iPhone 6 Plus basically to keep a backup of my iOS data, and I find that the battery drains on its own, even when I’m not using it. And a cheap Nuvision tablet designed for Windows 10 that I got for a steal tends to be finicky about the kind of power outlets it likes and how long it will keep a charge.

The connecting thread of these devices is that they’re not designed to be cracked open or repaired by users. But if we plan to keep them around into the present day, they have to be. Otherwise we’re dealing with millions of nonfunctional bricks.

We make no effort to allow vintage computers to live on the modern internet

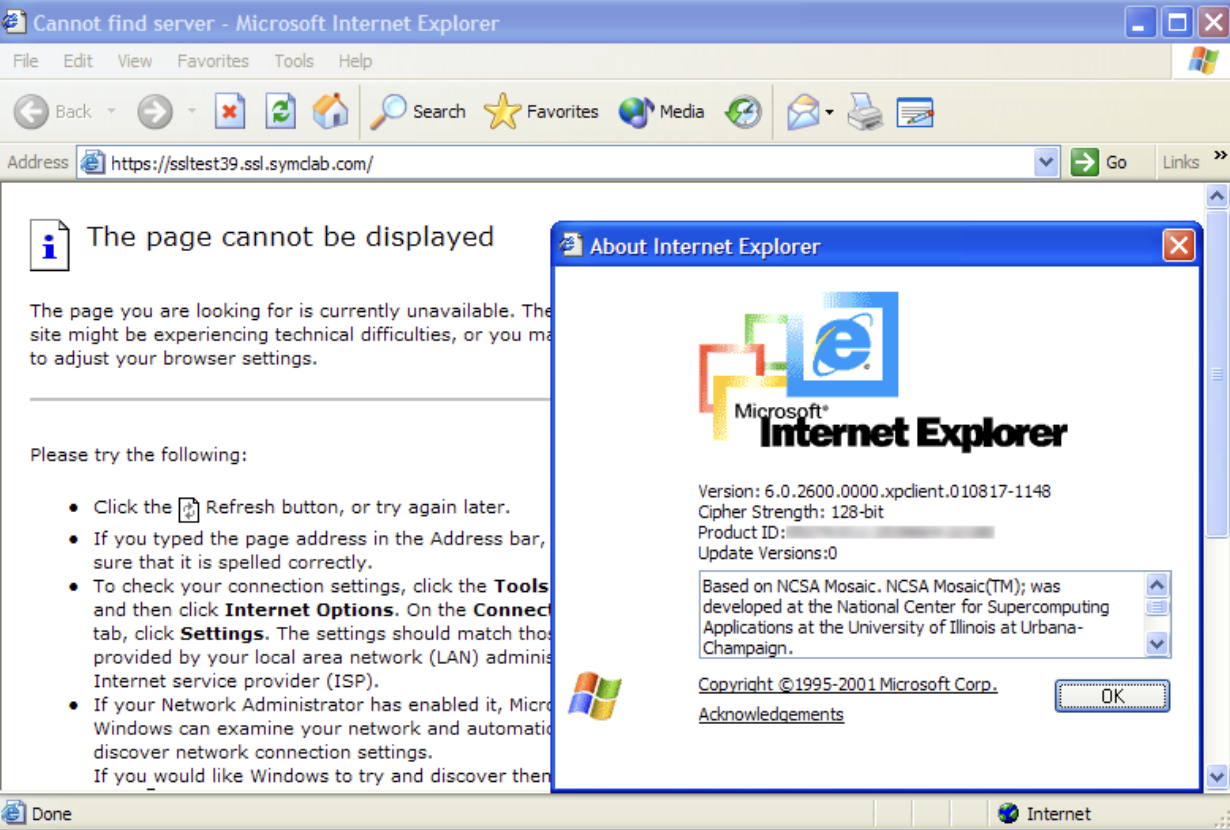

A decade ago, the biggest problem facing the internet was the large number of compatibility issues baked into Internet Explorer, a web browser that infamously stopped evolving for about half a decade, creating major issues that took years to sort out.

We’re long past that point, but I’d like to argue that, in many ways, we’ve created the opposite problem.

Now, our browsers are more standards-compliant than ever. But this has left an entire class of software, built on old operating systems, with a degraded experience.

The problem comes down to security standards such as Transport Layer Security and the older Secure Sockets Layer, network protocols that were designed to help secure traffic. During the heyday of the early internet, https was used on only a few sites where secure use cases were recommended.

But since then, opinions in the security community have shifted on the use of https, for reasons of privacy and secure data. Google, in particular, forced the issue of dropping http, dangling the threat of a drop in search-engine rankings. Last year, Google Chrome took the step of marking http sites as “not secure,” effectively shaming websites that ignore digital security standards.

At the same time, old security protocols such as SSL and TLS 1.0 have been dropped or are being phased out in modern browsers, and many newer browsers require certificates signed with stronger hash algorithms, such as SHA-2, which means that there are strong deterrents for running a web browser that is too old.
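The version mismatch can be sketched in a few lines of Python using the standard `ssl` module. The TLS 1.2 floor and the function names are assumptions chosen for illustration, not the policy of any particular site or browser.

```python
import ssl

# Hypothetical policy: the oldest protocol version a modern endpoint accepts.
MODERN_MINIMUM = ssl.TLSVersion.TLSv1_2


def can_negotiate(client_max: ssl.TLSVersion,
                  server_min: ssl.TLSVersion = MODERN_MINIMUM) -> bool:
    """True if the newest version the client speaks meets the server's floor.

    ssl.TLSVersion is an integer enum, so the concrete versions
    (SSLv3, TLSv1, TLSv1_1, TLSv1_2, TLSv1_3) compare in protocol order.
    """
    return client_max >= server_min


def modern_client_context() -> ssl.SSLContext:
    """A client-side context that refuses anything older than the floor."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = MODERN_MINIMUM
    return ctx
```

Under this assumed policy, a vintage browser that tops out at TLS 1.0 fails the check before it ever sees a page. That is the wall an old machine hits on an https-only web.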

So all these sites that didn’t have to use https in the past are now using it because if they don’t, it will hurt their traffic and their business. We’ve forced security on millions of websites this way, and the broader internet has benefited greatly from this. It improves digital security in nearly all cases.

But for purposes of preservation, we’ve put in additional layers that prevent correct use of old computers. From a research standpoint, this prevents an easy look at websites in their original context as we’ve forced upgrades to support modern computers while actively discouraging efforts to support older ones.

This leads to situations where the only way to make an old internet-enabled computer even reasonably functional online is to use hacks that allow for a browser with modern security standards … or to switch to an alternate operating system. This is something I ran into earlier this year when I started using a 2005 Mac Mini as part of an experiment. But I’ve also run into it within controlled environments like virtualization, and it made using the operating system unnecessarily hard, as I was unable to easily download things like drivers necessary for my use case.

There are a few die-hards out there who have not given in to the https drumbeat. When I load up an old computer or an old browser, the first site I try is Jason Scott’s textfiles.com, because it’s the only one I know I can get to work without any problem. His decision to drop https for that site led him to run into a whole lot of debates online, but it may have been the best thing he could have done for collectors, historians, and preservationists: now we have a place we KNOW will work when we’re just trying to test an old piece of hardware or software, with no bullshit.

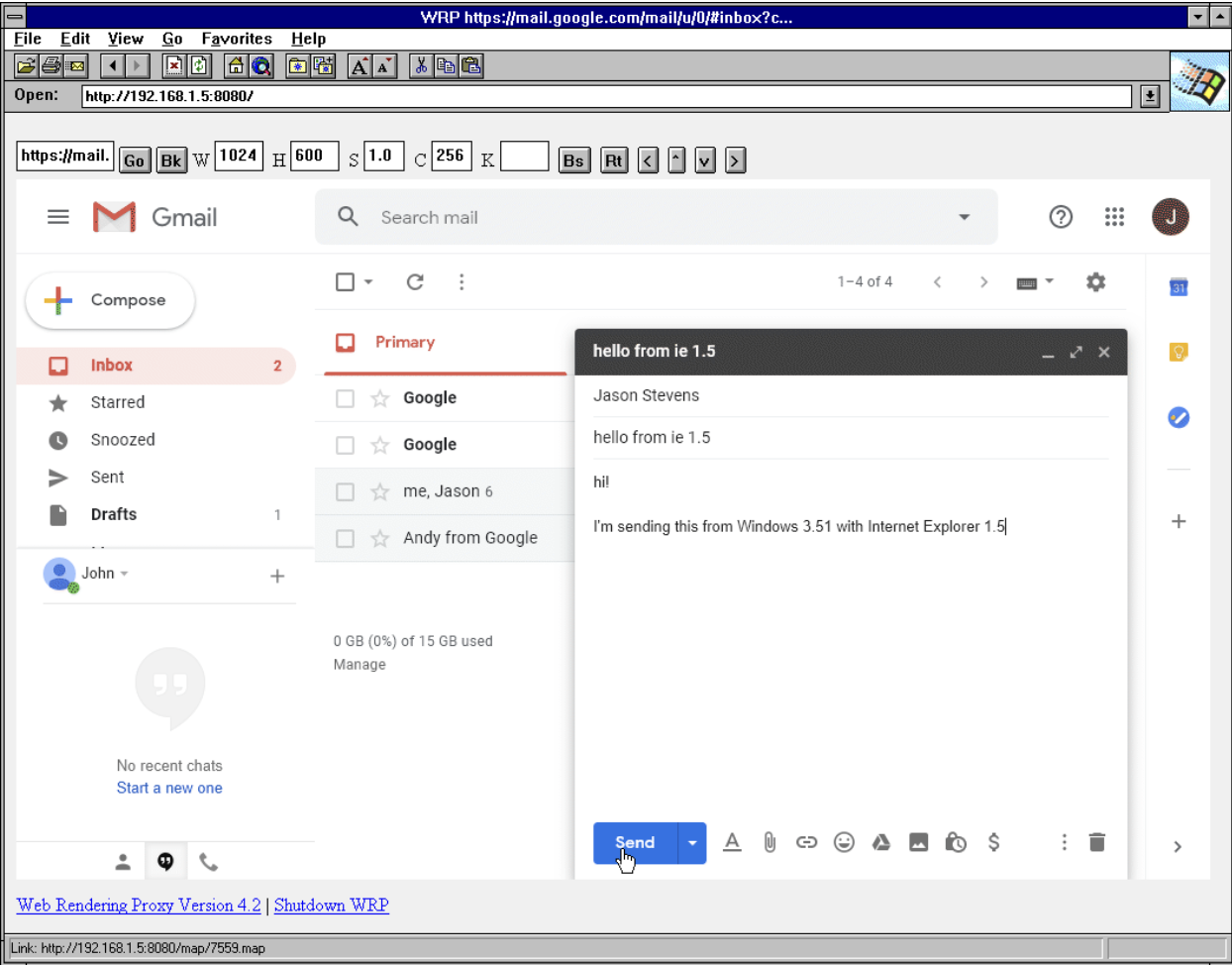

What I would like to see are more attempts to push the new into the old on its own terms, with a great example coming from Google programmer Antoni Sawicki, who built a web-rendering proxy that allows modern websites to run on outdated browsers. It effectively turns the modern website into a giant GIF that then can be used through a proxy layer.
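For a sense of how a proxy like this could hang together, here is a rough Python sketch of the two pieces an old browser would actually touch. It is one possible design under stated assumptions, not a description of Sawicki's code: the `/click/` and `/shot/` paths and both function names are hypothetical, and the rendering step (a modern browser taking the screenshot on the proxy) is left out entirely.

```python
from typing import Tuple
from urllib.parse import quote, unquote


def wrapper_page(target_url: str) -> str:
    """Build the tiny HTML page the vintage browser receives.

    The whole site is one image. The ISMAP attribute makes the browser
    append "?x,y" to the link when the image is clicked.
    """
    encoded = quote(target_url, safe="")
    return (
        "<html><body>"
        f'<a href="/click/{encoded}"><img src="/shot/{encoded}.gif" ismap></a>'
        "</body></html>"
    )


def parse_click(request_path: str) -> Tuple[str, int, int]:
    """Recover the target URL and click coordinates from an ISMAP request."""
    path, _, coords = request_path.partition("?")
    x, y = (int(value) for value in coords.split(","))
    target = unquote(path[len("/click/"):])
    return target, x, y
```

The appeal of this shape is that the old browser only needs plain http, a GIF decoder, and image-map support, all of which browsers have had since the 1990s. The proxy absorbs everything modern.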

We need more of this. At some point, we’re going to want to collectively come back to these old tools to understand them, but we seem to be doing everything in our power to prevent them from getting online. There needs to be a balance.

We’re starting to close off support for older generations of apps in mainstream software

The most problematic issue that I see is one that’s likely to become worse in the coming years.

And we’re about to get our first real taste of it in a mainstream desktop operating system.

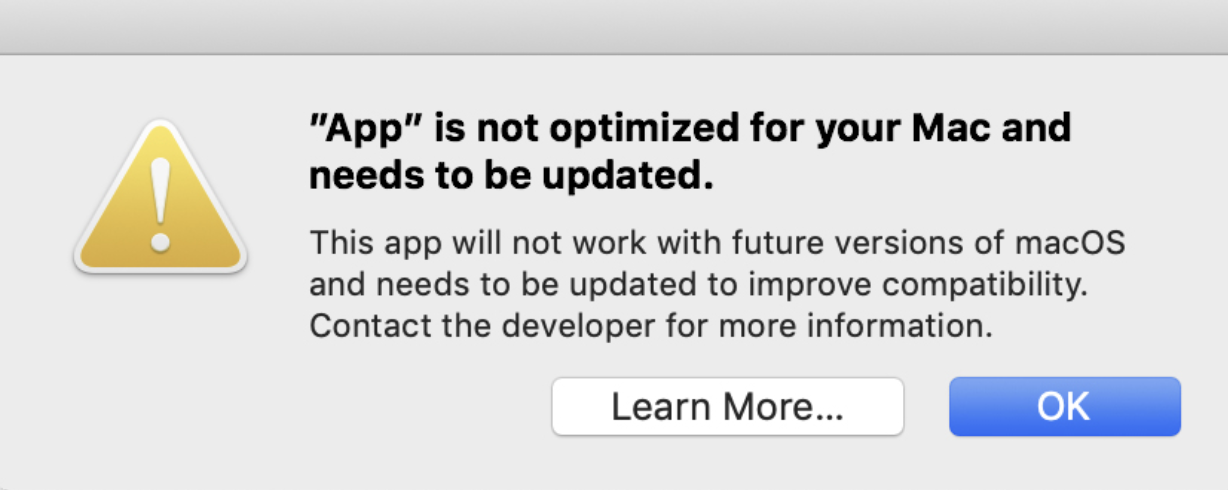

In the coming weeks, Apple is likely to release Catalina, the latest version of macOS, which is going to drop support for all 32-bit apps, meaning that literally decades of work developed for prior versions of macOS will become unusable.

It’s not the first time Apple has done this (the 2009 release of Mac OS X 10.6, Snow Leopard, formally pulled the Band-Aid off PowerPC support), but the decision to drop support for 32-bit apps is likely going to cut off a lot of tools that are intended to work on as many platforms as possible.

“The technologies that define today’s Mac experience—such as Metal graphics acceleration—work only with 64-bit apps,” Apple explains of its decision on its website. “To ensure that the apps you purchase are as advanced as the Mac you run them on, all future Mac software will eventually be required to be 64-bit.”

(Apple even has a “burn book” of sorts, a configuration file where it specifically lists 235 apps that will no longer work with Catalina. Most are very old versions of software.)

Now, this is great if your goal is to always be on the latest software, but many people run older software, for a variety of reasons. Some of the biggest? The software isn’t being actively developed anymore, you prefer the older version for design and aesthetic reasons, or it’s something that isn’t developed on a continuous release cycle, like a game.

Now, if this debate were limited to Apple, that would be one thing, but the truth is, the company is just an early adopter. Earlier this summer, Canonical, the company that develops Ubuntu, greatly upset a good portion of its user base after announcing that it was removing support for 32-bit applications, which would have basically made the Linux distribution useless for many games, harming a community that was just starting to warm to Linux. Eventually, Canonical backed down, after it became clear that the developer community was not the same as the user community.

“We do think it’s reasonable to expect the community to participate and to find the right balance between enabling the next wave of capabilities and maintaining the long tail,” Canonical said in a statement.
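Whether an app lands on the wrong side of these decisions comes down to a few bytes at the start of the file. As a minimal sketch, the Python below reads the header of an executable and reports whether it is 32-bit or 64-bit, using the published magic numbers for the Mach-O format (macOS) and the class byte of the ELF format (Linux). The function names are my own, and universal ("fat") Mac binaries, which bundle several architectures in one file, are deliberately not handled.

```python
ELF_MAGIC = b"\x7fELF"

# Mach-O magic numbers as they appear on disk, in both byte orders.
MACHO_MAGICS = {
    bytes.fromhex("feedface"): "32-bit",  # big-endian, e.g. PowerPC
    bytes.fromhex("cefaedfe"): "32-bit",  # little-endian, e.g. Intel
    bytes.fromhex("feedfacf"): "64-bit",
    bytes.fromhex("cffaedfe"): "64-bit",
}


def classify_header(header: bytes) -> str:
    """Classify an executable from its first bytes."""
    if header[:4] == ELF_MAGIC and len(header) >= 5:
        # The fifth ELF byte is the class: 1 means 32-bit, 2 means 64-bit.
        return {1: "32-bit", 2: "64-bit"}.get(header[4], "unknown")
    # Anything else, including universal binaries, falls through to "unknown".
    return MACHO_MAGICS.get(header[:4], "unknown")


def classify_file(path: str) -> str:
    """Read just enough of a file to classify it."""
    with open(path, "rb") as handle:
        return classify_header(handle.read(8))
```

Pointing `classify_file` at the binaries inside an old app folder or game directory gives a quick, if rough, census of what a 64-bit-only system would strand.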

Now, there are always solutions that would allow the use of vintage or even obsolete software in modern contexts. Virtualization and emulation software like VirtualBox and QEMU create ways to recreate an experience. But these tools are often more technical and less seamless than native platforms they replace, and I worry that nobody is thinking of ways to make them easier for the average person to use.

And, to be fair, there are definitely reasons to discourage the use of 32-bit software. As How-To Geek notes, most Windows applications are made as 32-bit apps, in part because those have broader support than 64-bit apps, which are more future-proofed. That means a lot of developers aren’t taking advantage of the latest technologies, despite clear advantages.

But as architectures change and technology evolves, I’m convinced that there will be a time that old software loses the battle, all because we chose not to prioritize its long-term value.

We may be nostalgic, but the companies that make our devices quite often are not.

Why make such a big fuss about all this? After all, we know that planned obsolescence has long been a part of our digital lives, and that the cloud is less a permanent state, and more an evolving beast.

But that beast will be hard to document, and ultimately, its story needs to be preserved and interpreted, hopefully in the same context in which it was created. The technology we use today, however, does not allow for that to the degree that’s needed. It highlights a dichotomy between the digital information that will be somewhat easy to protect and the physical portals to that data, which will be harder to document because their basic function is tethered to the internet, and that tethering, for one reason or another, will inevitably break.

I’ve touched on this in the past, but I think the problem is actually more serious than I’ve laid out, because we’re barreling away from permanence and towards constant chaos.

In two decades, I can see this scenario playing out: A thirtysomething adult is going to think back one day to his or her days in middle school, and wax nostalgic for that old laptop they used back then. It’s an old Chromebook their parents bought for them, covered in stickers like a Trapper Keeper circa 1984, and it holds memories of a specific time in their lives.

Inevitably, they’ll open it up and try to turn it on … and honestly, it’s not clear what might happen. Perhaps the machine won’t turn on at all, due to TouchPad-like issues with the design of the power supply that require some sort of charge in the battery to function. Maybe they’ll get lucky, and it’ll turn on after they plug it in, even if the battery won’t hold a charge. But then they try to log in … and they find that they can’t log in due to a security protocol change Google strong-armed onto the internet a decade ago. Maybe we won’t use passwords at all anymore, meaning there’s no easy way to log in. Maybe they’ll figure out a way to get around the error screen, only to load up a website, and find that it won’t load, due to the fact that it uses a web standard that wasn’t even invented back then.

Unlike early computers that still make their pleasures functionally accessible with the flip of a switch (and admittedly a recapping and possibly a little retrobrite), this machine, an artifact of someone’s past, could likely become functionally useless.

All because Google decided to stop supporting the machines, despite the fact that they were still perfectly functional, due to some structured plan for obsolescence.

Now apply that thought process to every device you currently own—or owned just a few years ago—and you can see where this is going.

We’re allowing the present to conspire against the past in the name of the future.

We’re endangering nostalgia, something important to the way we see the world even as it’s frequently imperfect, due to technology that at one point was seen as a boon for progress.

We’re making it much harder to objectively document the information in its original context. And the same companies that are forcing us into this brave new world where we’re deleting history as fast as we’re creating it should help us fix it.

Because it will be way too late to do so later.

This article originally appeared on VICE US.